In this post I will continue from the previous post about centralizing xEvents-based query execution stats collection and reporting. The key topics I will cover are:

1) Determine which SQL instances you need to collect from and where the last collection left off (to handle incremental loads throughout the day from the source servers to the central DB server)

2) Review of the xPath oriented queries that parse the .XEL files into a relational format for storage in the central DB

In my last post, I ended by discussing the XEL file’s offset functionality and how to use it within the sys.fn_xe_file_target_read_file system function to bring back a set of events “since the last poll”. This functionality is what I use to perform incremental loads of xEvent query execution data from various remote SQL Servers into my central database. First let’s take a look at the basic data model I am using:

DB_ServerMain has the name of the SQL Server and a flag (enablexEventQueryCollection) to denote whether it should be included in centralized collection. Straightforward enough… you can get the list of SQL instances to collect xEvents from with a query like:

Select *

from DB_ServerMain

where enablexEventQueryCollection = 1

Recall from my previous post that we can use the file_offset value in the .XEL file to run an incremental load (return everything after the specified offset). In the data model presented above, you can see the XEvents_Queries table has the servername, file_name, and file_offset values (along with the query stats specifics). In order to fully automate the collection process, we need the name of the SQL Server instance, the name of the last XEL file we collected from, AND the highest file_offset value within that last file that we have a record of.

Select a.ServerName,

isnull(xEventFile.xEventFile_Name,'NULL') as xEventFile_Name,

isnull(convert(varchar(50),xEventFileOffset.xEventFile_Offset), 'NULL') as xEventFile_Offset

from DB_ServerMain a

OUTER APPLY (Select max(file_name) as xEventFile_Name from dbo.XEvents_Queries ws where ws.servername = a.servername ) as xEventFile

OUTER APPLY (Select max(file_offset) as xEventFile_Offset from dbo.XEvents_Queries ws where ws.servername = a.servername and ws.file_name = (Select max(file_name) as xEventFile_Name from dbo.XEvents_Queries ws where ws.servername = a.servername )) as xEventFileOffset

I’ll admit, it’s not the slickest code in the world, but it works to pull the servername, filename and file_offset for each instance to be monitored. The OUTER APPLY(s) help in keeping it fast enough even after you have millions of rows in XEvents_Queries. Once you know the instances you want to collect from (and the latest filename/file_offset combination), you can connect to each SQL instance and select the contents of the .XEL files using the additional parameters in the call to the sys.fn_xe_file_target_read_file() system function like this:

SELECT CAST(event_data AS XML) AS event_data_XML, file_name, file_offset INTO #Events FROM sys.fn_xe_file_target_read_file('C:\DBAMonitoring\traces\sql2012*.xel', null,'C:\DBAMonitoring\traces\sql2012_0_130295018340930000.xel', 141312) AS F

In the example above, the call will create (and fill) a temporary table called #Events with the full event XML, file name, and file offset for each event in the files that match the wildcard search “C:\DBAMonitoring\traces\sql2012*.xel”. It starts from the file and file_offset we specified (sql2012_0_130295018340930000.xel and 141312) and disregards any earlier files and any earlier entries within that file (based on the file_offset value).
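In an automated collector, the file name and offset would come from the central database rather than being typed in. The following is only a sketch of how that might look: the two "FromCentral" variables are hypothetical stand-ins for values returned by the lookup query earlier in this post, which hands back the string 'NULL' when nothing has been collected yet. Mapping that string back to a real NULL for both parameters makes the function read every matching file on the first collection.

```sql
-- Hypothetical values, as they would arrive from the central lookup query
-- (both are strings there, and 'NULL' means "never collected").
DECLARE @FileNameFromCentral nvarchar(260) = N'C:\DBAMonitoring\traces\sql2012_0_130295018340930000.xel';
DECLARE @OffsetFromCentral   varchar(50)   = '141312';

-- Turn the 'NULL' placeholder strings back into real NULLs.
DECLARE @LastFile   nvarchar(260) = NULLIF(@FileNameFromCentral, N'NULL');
DECLARE @LastOffset bigint        = CONVERT(bigint, NULLIF(@OffsetFromCentral, 'NULL'));

-- Start clean if the temp table is left over from a previous run in this session.
IF OBJECT_ID('tempdb..#Events') IS NOT NULL
    DROP TABLE #Events;

-- Same incremental read as above, driven by variables instead of literals.
SELECT CAST(event_data AS XML) AS event_data_XML,
       file_name,
       file_offset
INTO   #Events
FROM   sys.fn_xe_file_target_read_file(
           N'C:\DBAMonitoring\traces\sql2012*.xel',
           NULL,
           @LastFile,
           @LastOffset) AS F;
```

The file name and offset have to be supplied as a pair: either both carry values or both are NULL.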

The above query is basically the “incremental load” since the last poll, but it only pulls the raw XML event data (into an XML column) with the filename and file_offset associated with that event. In order to make sense of the XML event, it needs to be parsed using xPath functions to pull out the individual elements (e.g. reads, writes, duration, etc). The code below shows that logic:

SELECT @@ServerName as ServerName,

file_name,

file_offset,

event_data_XML.value ('(/event/action[@name=''query_hash'']/value)[1]', 'nvarchar(100)') AS query_hash,

DATEADD(mi, DATEDIFF(mi, GETUTCDATE(), GETDATE()), event_data_XML.value ('(/event/@timestamp)[1]', 'datetime' )) AS timestamp,

event_data_XML.value ('(/event/@name)[1]', 'vARCHAR(50)' ) AS EventName,

event_data_XML.value ('(/event/data [@name=''duration'' ]/value)[1]', 'int')/1000 AS duration_ms,

event_data_XML.value ('(/event/data [@name=''object_type'' ]/text)[1]', 'varchar(100)') AS object_type,

DB_Name(event_data_XML.value ('(/event/action [@name=''database_id'' ]/value)[1]', 'int')) AS DatabaseName,

CASE event_data_XML.value ('(/event/@name)[1]', 'vARCHAR(50)' )

when 'sp_statement_completed' then event_data_XML.value ('(/event/data [@name=''object_name'' ]/value)[1]', 'NVARCHAR(4000)')

ELSE OBJECT_NAME(event_data_XML.value ('(/event/data [@name=''object_id'' ]/value)[1]', 'BIGINT'),event_data_XML.value ('(/event/action [@name=''database_id'' ]/value)[1]', 'int')) END as ObjectName,

event_data_XML.value ('(/event/action [@name=''client_hostname'']/value)[1]', 'varchar(100)') as HostMachine,

event_data_XML.value ('(/event/action [@name=''client_app_name'']/value)[1]', 'varchar(100)' ) as client_app_name,

event_data_XML.value ('(/event/action [@name=''nt_username'']/value)[1]', 'varchar(100)') as nt_username,

event_data_XML.value ('(/event/data [@name=''cpu_time'']/value)[1]', 'int')/1000 AS cpu_time_ms,

event_data_XML.value ('(/event/data [@name=''physical_reads'']/value)[1]', 'BIGINT') AS physical_reads,

event_data_XML.value ('(/event/data [@name=''logical_reads'' ]/value)[1]', 'BIGINT') AS logical_reads,

event_data_XML.value ('(/event/data [@name=''writes'' ]/value)[1]', 'BIGINT' ) AS writes,

event_data_XML.value ('(/event/data [@name=''row_count'' ]/value)[1]', 'BIGINT' ) AS row_count,

CASE event_data_XML.value ('(/event/@name)[1]', 'vARCHAR(50)' )

when 'sql_batch_completed' then event_data_XML.value ('(/event/data [@name=''batch_text'' ]/value)[1]', 'NVARCHAR(4000)')

ELSE event_data_XML.value ('(/event/data [@name=''statement'' ]/value)[1]', 'NVARCHAR(4000)')

END AS statement

FROM #Events

The code above uses xPath to parse out the various elements of the XML event into usable tabular columns that match the order and structure of the XEvents_Queries table in the central database. The results look like this:

So now you have access to the reads/writes/CPU/duration figures as well as the statement within a larger batch or stored procedure that is using those resources. You can run this type of query on the host in question anytime to get a real-time look at the query activity, but pulling it all into a central database (with rows marked with @@ServerName to distinguish between instances) will be a more manageable solution for environments where there is more than one instance to keep an eye on (read: any environment).

With the information from this post and previous posts, you should be able to set up an active xEvents session to capture “statement completed” events. You can then use a query similar to the example above to parse your captured xEvents data into easily consumable, aggregate-able (is that a word?) data sets for analysis.
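If you want to experiment with the xPath expressions before pointing them at real .XEL files, a small self-contained test can help. The sketch below runs a few of the same .value() calls against a hand-written, heavily simplified event; the XML is an illustrative sample I made up for this purpose (real events carry more elements and attributes), so treat the values as placeholders.

```sql
-- Hand-written, simplified sample event (not captured from a real session).
DECLARE @event xml = N'
<event name="sp_statement_completed" package="sqlserver" timestamp="2013-11-21T13:05:12.345Z">
  <data name="duration"><value>15000</value></data>
  <data name="object_type"><value>8272</value><text>PROC</text></data>
  <data name="logical_reads"><value>42</value></data>
  <action name="query_hash" package="sqlserver"><value>1234567890</value></action>
</event>';

SELECT
    -- Attributes of the <event> element are addressed with @.
    @event.value('(/event/@name)[1]', 'varchar(50)') AS EventName,
    -- <data> elements hold the event's own fields; duration is in microseconds.
    @event.value('(/event/data[@name=''duration'']/value)[1]', 'bigint') / 1000 AS duration_ms,
    -- Mapped fields have both a numeric <value> and a readable <text>.
    @event.value('(/event/data[@name=''object_type'']/text)[1]', 'varchar(100)') AS object_type,
    @event.value('(/event/data[@name=''logical_reads'']/value)[1]', 'bigint') AS logical_reads,
    -- <action> elements hold the extra actions added to the session.
    @event.value('(/event/action[@name=''query_hash'']/value)[1]', 'nvarchar(100)') AS query_hash;
```

The distinction to notice is data versus action: fields that belong to the event itself live under data, while anything added as an action on the session (query_hash, database_id, client_hostname and so on) lives under action.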

I have used this type of setup on the local server to answer questions like this:

1) What are my top 5 executed tSQL statements on the server between 8:05AM and 10:30AM yesterday?

2) Which database had the most io/cpu usage for the last 6 hours? Morning vs. Afternoon for the last 3 days? etc.
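As a rough sketch of how those two questions could be answered, the queries below aggregate the parsed rows. They assume the rows live in dbo.XEvents_Queries with column names matching the aliases in the parsing query above (query_hash, timestamp, duration_ms, and so on); the time window is a hypothetical placeholder, and "top executed" is interpreted here as execution count per query_hash.

```sql
-- Hypothetical time window; substitute the period you care about.
DECLARE @WindowStart datetime = '2013-11-21T08:05:00';
DECLARE @WindowEnd   datetime = '2013-11-21T10:30:00';

-- Question 1: top 5 statements by number of executions on this server.
SELECT TOP (5)
       query_hash,
       MIN([statement])   AS sample_statement,   -- one representative text per hash
       COUNT(*)           AS executions,
       SUM(duration_ms)   AS total_duration_ms,
       SUM(cpu_time_ms)   AS total_cpu_ms,
       SUM(logical_reads) AS total_logical_reads
FROM   dbo.XEvents_Queries
WHERE  ServerName = @@SERVERNAME
  AND  [timestamp] >= @WindowStart
  AND  [timestamp] <  @WindowEnd
GROUP BY query_hash
ORDER BY executions DESC;

-- Question 2: IO and CPU usage per database over the last 6 hours.
SELECT DatabaseName,
       SUM(cpu_time_ms)    AS total_cpu_ms,
       SUM(physical_reads) AS total_physical_reads,
       SUM(logical_reads)  AS total_logical_reads,
       SUM(writes)         AS total_writes
FROM   dbo.XEvents_Queries
WHERE  ServerName = @@SERVERNAME
  AND  [timestamp] >= DATEADD(HOUR, -6, GETDATE())
GROUP BY DatabaseName
ORDER BY total_cpu_ms DESC;
```

Grouping by query_hash rather than by statement text lets statements that differ only in their literal values roll up together. Against the central database you would filter on the name of the instance you are investigating instead of @@SERVERNAME.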

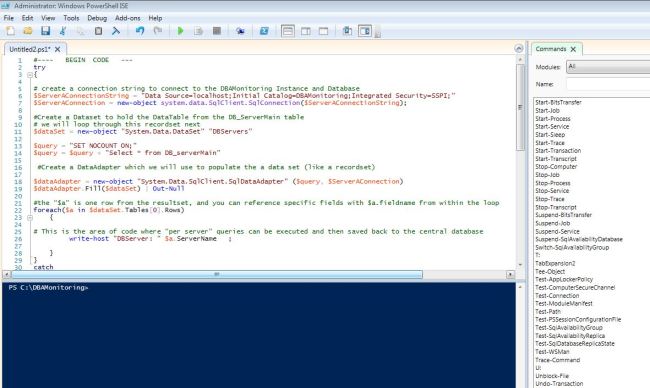

In my next post I’ll tie all of this together by going through a PowerShell .ps1 file that has the above queries embedded in it and actually performs the write of query event rows from remote servers to the central database.